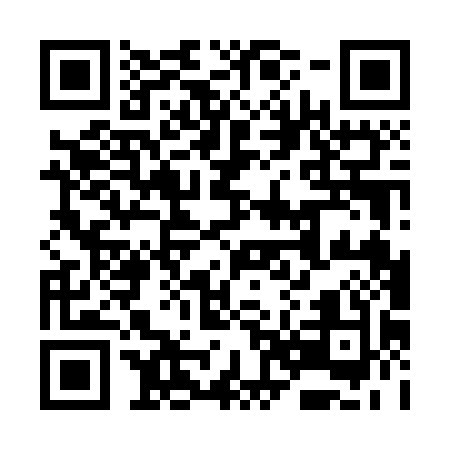

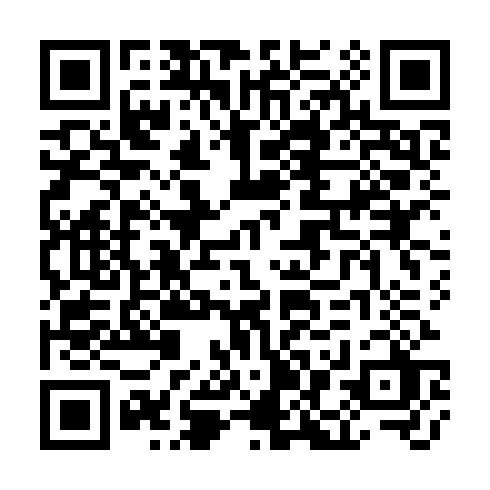

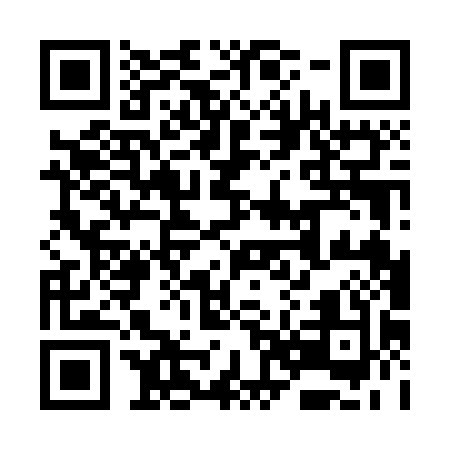

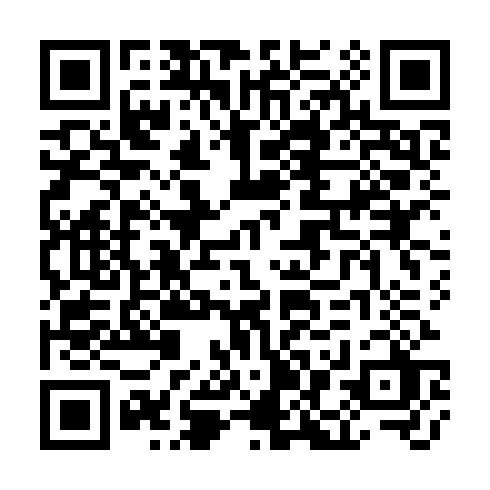

In the latest commits to master (version 0.9.3) of RNS, there are a few interesting (and pretty experimental) features that I think some people might want to play around with already, even though they haven't been released yet.

First of all, a long-standing TODO has been implemented in AutoInterface, which will now sub-interface each discovered Ethernet/WiFi peer for much better performance and path discovery. AutoInterface now also supports dynamic link MTU discovery. These updates improve performance significantly.

Most "experimentally", and perhaps most interestingly, RNS now includes an optional shim for on-demand transpilation of the entire RNS implementation to C, which is then compiled to machine-local object code at run-time. If requested, this happens dynamically at daemon initialisation via Cython (and only once, of course, if no locally compiled version exists already).

The on-demand compilation step, by itself, provides an instant performance increase of around 2x in the Reticulum transport core, cutting per-packet processing time approximately in half, due to the much decreased need for context switches between the Python VM and C-based backend. Notably, this is without any of the many potential optimisations added yet, such as static typing of variables in the transport core, object property slotting, logic vectorisation and so on.

These optimisations, together with link MTU discovery, now push the Reticulum transport core packet processing above 1.4 gigabits per second on my (relatively modest) test hardware, while still running on a single CPU core.

I've never really expanded much on how I envision the path to truly massive scalability for Reticulum before, and understandably most of the community has been focusing efforts in this regard on the tried-and-true approach of developing parallel implementations of Reticulum in compiled languages such as C, Rust or Go. That approach is something I very much support and value, and it is even a necessary one for wider availability and adoption, so please keep up the good work! But it is not the area I will be focusing my own efforts on, and there are some interesting, but non-obvious, reasons for that, so it's probably time to expand a bit on them.

One of the most critical components in the continued evolution of Reticulum right now is keeping the reference implementation readable, understandable, accessible and auditable. Without those qualities present, creating alternative implementations - and systems on top of Reticulum - is just too tall an order to expect anyone to care about. At the same time, Reticulum targets very demanding requirements in terms of security, privacy and performance (in the context of both very fast links and very slow links). Actually resolving those requirements into a real, functional system is bizarrely complex, to say the least, and honestly I do occasionally wonder how I even got this far.

From the outside, it's understandably difficult to appreciate just how much design, testing, re-evaluation, validation and implementation work it has taken to get to the point we're at now. It's taken me ten years, and let's just say that it truly has been a bizarrely complex journey. For solving tasks like this, a high-level language like Python is an absolutely excellent tool, and had I started out in C (even though that is my personal "favourite language"), I don't think I'd have succeeded.

Before going any further, I should clarify what massive scalability actually entails with regard to Reticulum. The current implementation is capable of handling throughputs in excess of 1 Gbps, which is definitely "good enough" for the foreseeable future. But down the line, and in the relatively near future, I think we need to start moving the target to transport capabilities of 100+ Gbps and network depths of millions (or even billions) of active endpoints.

There's a common misconception that Python is "slow", whatever is meant by that. From a more nuanced perspective, that assumption is incorrect. What can cause significant performance penalties in Python are the context switches between the Python byte-code VM and native machine code. But as I've already hinted at, those can be eliminated almost entirely by using the Python source code as a blueprint for deterministically generating machine code that runs at native speeds. I'd estimate that simply implementing static typing, property slotting and locking the memory structures for various lookup tables will provide a further 30-50x increase in performance.
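To make "property slotting" a little more concrete, here is a minimal pure-Python sketch of the idea using `__slots__`. The class and field names (`PathEntrySlotted`, `next_hop`, `hops`, `expires`) are hypothetical and are not taken from the RNS source; the point is only to show how fixing the attribute layout up front removes the per-instance dictionary, which is the kind of information a compiler can exploit.

```python
class PathEntryDict:
    """Ordinary class: every instance carries its own attribute dictionary."""

    def __init__(self, next_hop, hops, expires):
        self.next_hop = next_hop
        self.hops = hops
        self.expires = expires


class PathEntrySlotted:
    """Slotted class: the attribute layout is fixed when the class is defined.

    Hypothetical stand-in for a path table entry, not the real RNS structure.
    """

    # Only these attributes can exist; instances get no __dict__.
    __slots__ = ("next_hop", "hops", "expires")

    def __init__(self, next_hop, hops, expires):
        self.next_hop = next_hop
        self.hops = hops
        self.expires = expires


def has_instance_dict(obj):
    """Return True if the object stores attributes in a per-instance dict."""
    return hasattr(obj, "__dict__")
```

With this layout, `has_instance_dict(PathEntrySlotted(b"\x01", 2, 0.0))` is false, and assigning an attribute that is not listed in `__slots__` raises `AttributeError` instead of silently growing the object.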

With the recent release of Python 3.13, we're also finally starting to see the end of the notorious Global Interpreter Lock, allowing us true multi-core concurrency, and this along with the new Python JIT will speed up things even further.
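In Python 3.13 the GIL-free mode ships as an optional, separately built "free-threaded" interpreter, so whether it is in effect depends on the build you are running. The following is a small sketch for checking that at run-time; the function name `free_threading_status` is my own, and the probe falls back to "GIL enabled" on interpreters older than 3.13, where `sys._is_gil_enabled()` does not exist.

```python
import sys
import sysconfig


def free_threading_status():
    """Report whether this interpreter can, and currently does, run without the GIL."""
    # Set to 1 on free-threaded builds; 0 or None elsewhere.
    build_flag = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))

    # sys._is_gil_enabled() was added in Python 3.13. On older versions
    # the GIL is always active, so default to True.
    probe = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = bool(probe()) if probe is not None else True

    return {"free_threaded_build": build_flag, "gil_enabled": gil_enabled}
```

Calling `free_threading_status()` on a standard build should report the GIL as enabled; only a free-threaded build can report otherwise.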

But going beyond that, we're going to start needing to get a little creative. It's well-known that the TCP/IP stack is fast, but one of the primary reasons that this holds true in practical reality is a little less well-known.

On commercially available IP routers, be that consumer products or ISP-scale hardware, almost all of the packet processing doesn't actually occur on the CPU, but is hardware-accelerated on custom ASICs embedded in the router chipset. If you've ever tried bonding IP interfaces or inserting software-based VPNs in your routing path on systems that provide no hardware acceleration for those operations, you will have seen your previous line-rates drop to significantly less impressive numbers.

There's no real possibility of hardware vendors starting to design, fab and ship Reticulum-specific acceleration ASICs any time soon, so we can't rely on that approach. What we can rely on instead is another type of general-purpose parallelisation solution that has seen massive improvements in recent years, and which is now more or less ubiquitous in all compute devices: the GPU. Even mobile chipsets and embedded SBCs include capable GPUs with quite ample memory and shader core counts.

By leveraging existing, general-purpose acceleration frameworks (such as OpenCL or Vulkan Compute), a truly general-purpose Reticulum packet-processing accelerator can be designed and deployed on more or less any existing system, essentially for free - at least in the sense that no new hardware has to be invented.

If active path tables and other transport-essential data structures are shipped off to GPU memory, and the internal state of the Reticulum transport core is made completely vectorisable, packet processing can truly be massively parallelised. The transport logic was already designed with such vectorisation in mind, but of course it will take some work to get there.
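As a rough illustration of what "vectorisable" state could look like, here is a CPU-only sketch in plain Python. It is not how RNS stores its path table: the flat, sorted key array, the parallel next-hop array, the small integer keys and the names `lookup_one` and `lookup_batch` are all assumptions made for the example. What it shows is the shape of the problem. The table is laid out as contiguous arrays rather than a web of objects, and each packet's lookup is a pure function of the table and its own key, so a batch can be handed to any number of parallel workers (or, in principle, GPU threads) without coordination.

```python
from array import array
from bisect import bisect_left

NO_PATH = -1  # Sentinel returned when a destination has no known path.


def lookup_one(sorted_keys, next_hops, key):
    """Binary-search one destination key; touches no shared mutable state."""
    i = bisect_left(sorted_keys, key)
    if i < len(sorted_keys) and sorted_keys[i] == key:
        return next_hops[i]
    return NO_PATH


def lookup_batch(sorted_keys, next_hops, batch):
    """Resolve a whole batch of keys.

    Every element is independent of the others, so this loop is the part
    that could be distributed across cores or accelerator threads.
    """
    return [lookup_one(sorted_keys, next_hops, key) for key in batch]


# Hypothetical table: destination keys (sorted) and a parallel array of
# outgoing interface indices. Real destination hashes are much wider.
keys = array("Q", [3, 7, 9])
hops = array("i", [0, 2, 1])
```

For example, `lookup_batch(keys, hops, [7, 4, 3])` returns `[2, -1, 0]`: two destinations resolve to interface indices and the unknown one yields `NO_PATH`.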

The beauty of this approach is that it will still work on any type of CPU as well, even if no acceleration hardware is present on the system. This allows for a single implementation that will dynamically adapt to whatever resources are available on the system, from a single-core SBC, to an accelerated core transport node capable of handling tens of millions of packets per second.
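For a sense of scale, throughput and packet rate are related by simple arithmetic. The sketch below assumes 500-byte packets, which as far as I know is Reticulum's default MTU; links that negotiate a larger MTU would need proportionally fewer packets per second for the same throughput. The function name is my own.

```python
def packets_per_second(bits_per_second, packet_bytes):
    """Packet rate needed to sustain a given throughput at a fixed packet size."""
    return bits_per_second / (8 * packet_bytes)


# Assumed packet size of 500 bytes (see the note above).
current = packets_per_second(1.4e9, 500)   # 350,000 packets per second
target = packets_per_second(100e9, 500)    # 25,000,000 packets per second
```

Under that assumption, a 100 Gbps target works out to 25 million packets per second, which is where the "tens of millions" figure lands.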

While all of this is immensely interesting, and something I'd love to sink myself into right now, realistically it's still some way off into the future, since there's plenty of other important work to do first. Still, I thought it would be good to share some of the thoughts that form the basis of the current optimisation work, and where it is ultimately going.